Robustness of decision rules to shifts in the data-generating process is crucial to the successful deployment of decision-making systems, since such systems must be applied to inputs drawn from outside the data distribution they were trained on. Local adversarial robustness guarantees that a prediction does not change within some neighbourhood of a specific input point; we are instead interested in robustness to distribution shifts. Such shifts can be viewed as interventions on a causal graph, which capture (possibly hypothetical) changes in the data-generating process, whether they arise naturally or through the action of an adversary.

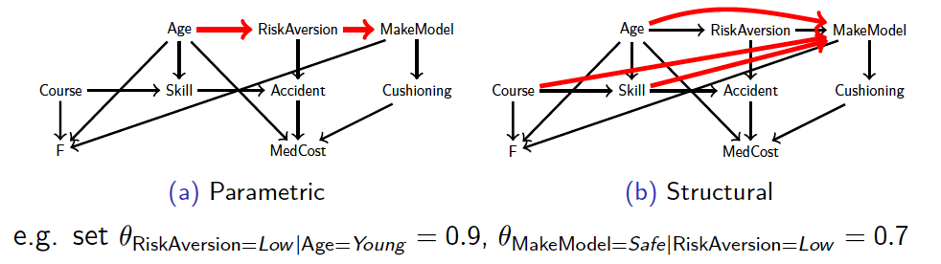

The diagram shows a causal Bayesian network for an insurance model under two types of such shifts: parametric (changes to conditional probability values) and structural (removal or addition of causal links).

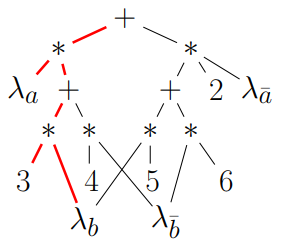

We formally define the interventional robustness problem, a novel model-based notion of robustness for decision functions that measures worst-case performance with respect to a set of interventions representing changes to parameters and/or causal influences. Although the problem itself has exponential complexity, we rely on a tractable representation of Bayesian networks as arithmetic circuits to provide efficient algorithms for computing guaranteed upper and lower bounds on the interventional robustness probabilities, including in the presence of data uncertainty. To this end, we exploit the efficient compilation of Bayesian networks into arithmetic circuits (see image), and compile the decision function and the data-generating process jointly.

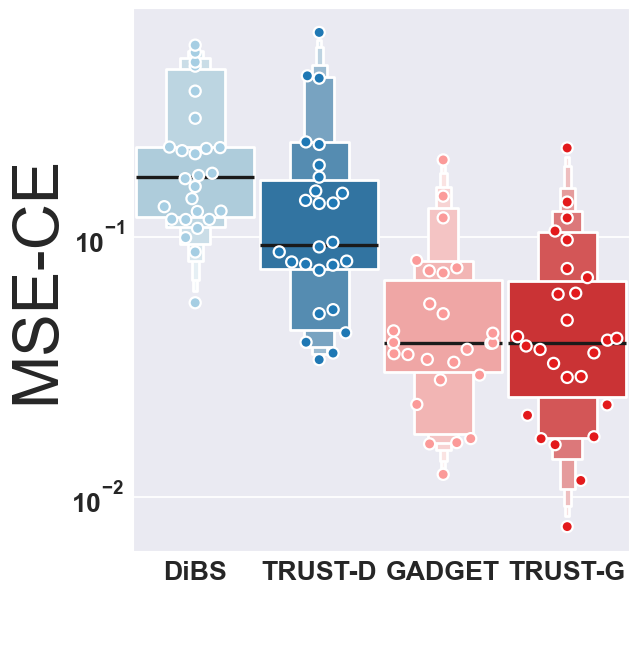
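To make the notion of worst-case performance over a set of interventions concrete, the following is a minimal brute-force sketch on a toy network. It is not the arithmetic-circuit algorithm described above: the three-variable network, its probability values, the decision rule, and the candidate intervention grids are all hypothetical, chosen only for illustration. Parametric interventions replace the conditional probability table of `X2`; structural interventions remove the link `X1 -> X2`, so that `X2` no longer depends on `X1`.

```python
from itertools import product

# Hypothetical toy network over binary variables: X1 -> X2, (X1, X2) -> Y.
P_X1 = 0.3                                   # P(X1 = 1)
P_X2_GIVEN_X1 = {0: 0.2, 1: 0.7}             # P(X2 = 1 | X1)
P_Y_GIVEN_X = {(0, 0): 0.1, (0, 1): 0.6,     # P(Y = 1 | X1, X2)
               (1, 0): 0.4, (1, 1): 0.9}


def decision(x1, x2):
    """Hypothetical decision rule: predict Y = 1 exactly when X2 = 1."""
    return x2


def bernoulli(p, value):
    """Probability that a Bernoulli(p) variable takes the given value."""
    return p if value == 1 else 1.0 - p


def error_probability(p_x2_given_x1):
    """P(decision(X1, X2) != Y) when X2 follows the given conditional table."""
    total = 0.0
    for x1, x2, y in product((0, 1), repeat=3):
        if decision(x1, x2) != y:
            total += (bernoulli(P_X1, x1)
                      * bernoulli(p_x2_given_x1[x1], x2)
                      * bernoulli(P_Y_GIVEN_X[(x1, x2)], y))
    return total


def candidate_interventions(param_grid=(0.1, 0.5, 0.9),
                            struct_grid=(0.25, 0.75)):
    """Enumerate a small, assumed set of interventions on X2."""
    # Parametric: keep the edge X1 -> X2 but change the table entries.
    for p0, p1 in product(param_grid, repeat=2):
        yield "parametric", {0: p0, 1: p1}
    # Structural: remove X1 -> X2, so X2 has the same distribution for any X1.
    for p in struct_grid:
        yield "structural", {0: p, 1: p}


def robustness_range():
    """Best-case and worst-case error probability over all candidates."""
    errors = [error_probability(cpt) for _, cpt in candidate_interventions()]
    return min(errors), max(errors)


if __name__ == "__main__":
    print("error without intervention:", error_probability(P_X2_GIVEN_X1))
    print("(best, worst) under interventions:", robustness_range())
```

The enumeration visits every candidate intervention and every joint assignment, so its cost grows exponentially with the number of variables and intervened tables. This is the blow-up that motivates computing bounds on a compiled circuit instead of enumerating.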

Bayesian structure learning allows one to capture uncertainty over the causal directed acyclic graph (DAG) responsible for generating given data. We develop TRUST (Tractable Uncertainty for STructure learning), a framework for approximate posterior inference that uses probabilistic circuits to represent posterior belief. In contrast to sample-based posterior approximations, our representation can capture a much richer space of DAGs while still answering a range of useful inference queries tractably. We show empirically that probabilistic circuits can serve as an augmented representation for structure learning methods, improving both the quality of the inferred structures and the posterior uncertainty, and we show how causal queries can be estimated.
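As an illustration of what a posterior over DAGs and an inference query on it look like, the sketch below enumerates every DAG on three nodes and computes posterior edge probabilities exactly. It does not use probabilistic circuits and is not the TRUST method. The node names and the scoring function `log_score` are hypothetical stand-ins for a data-dependent score such as a marginal likelihood.

```python
import math
from itertools import product

NODES = ("A", "B", "C")
POSSIBLE_EDGES = [(u, v) for u in NODES for v in NODES if u != v]


def is_acyclic(edges):
    """Repeatedly peel off nodes with no incoming edge from remaining nodes."""
    remaining = set(NODES)
    while remaining:
        sources = {n for n in remaining
                   if not any(v == n and u in remaining for u, v in edges)}
        if not sources:
            return False  # every remaining node has a parent: there is a cycle
        remaining -= sources
    return True


def all_dags():
    """Enumerate all edge sets over NODES that form a DAG."""
    dags = []
    for mask in product((0, 1), repeat=len(POSSIBLE_EDGES)):
        edges = frozenset(e for e, keep in zip(POSSIBLE_EDGES, mask) if keep)
        if is_acyclic(edges):
            dags.append(edges)
    return dags


def log_score(edges):
    """Hypothetical unnormalised log-posterior, made up for illustration:
    it favours A -> B and B -> C and penalises every edge."""
    return (2.0 * (("A", "B") in edges)
            + 1.0 * (("B", "C") in edges)
            - 0.5 * len(edges))


def posterior(dags):
    """Normalise the scores into a distribution over the enumerated DAGs."""
    weights = [math.exp(log_score(g)) for g in dags]
    total = sum(weights)
    return [w / total for w in weights]


def edge_probability(edge, dags, probs):
    """Posterior probability that the given directed edge is present."""
    return sum(p for g, p in zip(dags, probs) if edge in g)


if __name__ == "__main__":
    dags = all_dags()
    probs = posterior(dags)
    print("number of DAGs:", len(dags))
    print("P(A -> B):", edge_probability(("A", "B"), dags, probs))
    print("P(B -> A):", edge_probability(("B", "A"), dags, probs))
```

Exact enumeration is feasible only for a handful of variables: there are 25 DAGs on three labelled nodes, 543 on four, and 29,281 on five. Sample-based approximations cope with this growth by keeping only a limited set of sampled graphs, which is the limitation a compact representation of the posterior aims to avoid.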

The image shows the mean squared error (MSE) of causal effects (lower is better) for TRUST in comparison with state-of-the-art methods.