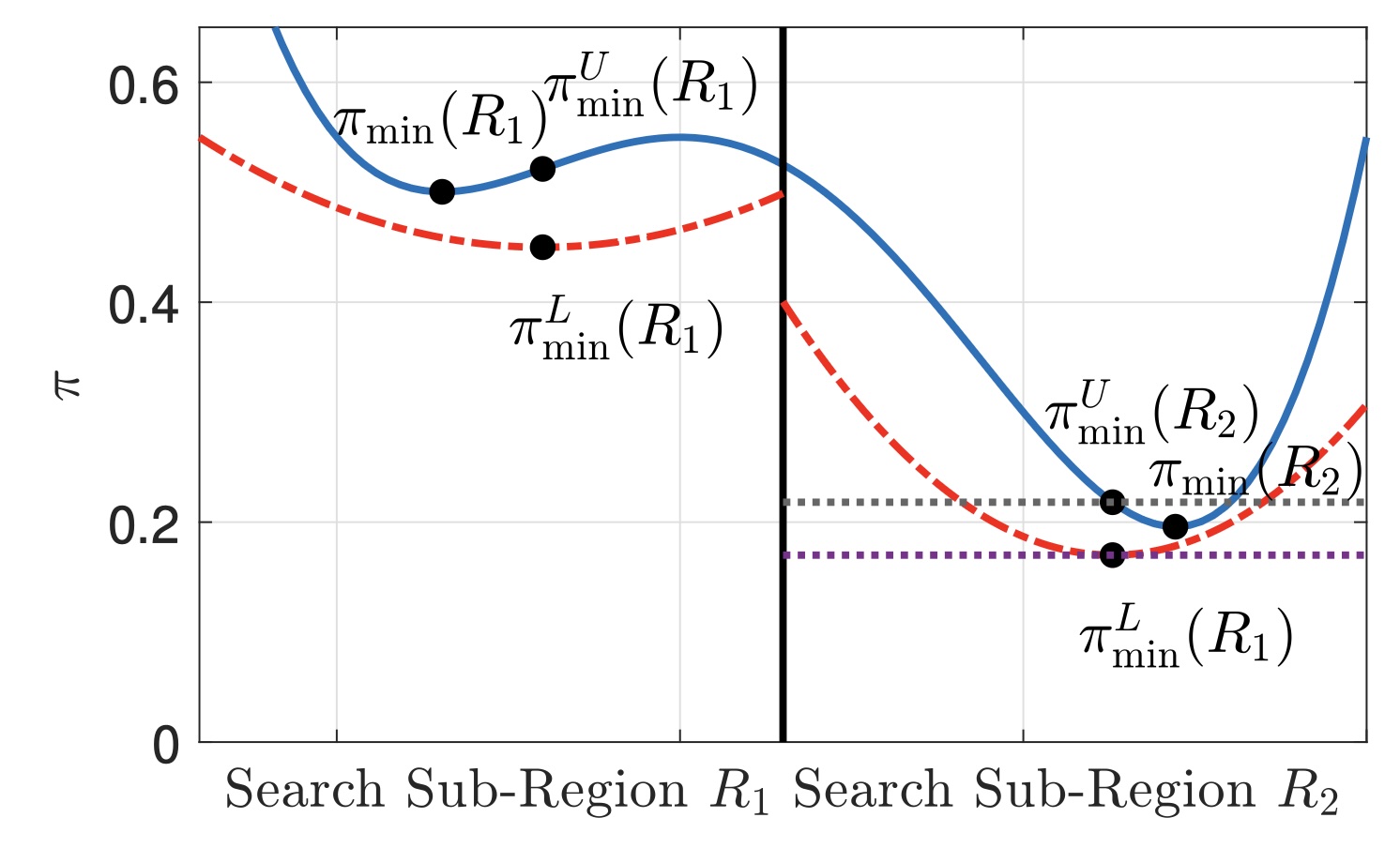

We study adversarial robustness for Gaussian process models, defined as invariance of the model’s decision to bounded perturbations, in contrast to distributional robustness. We develop a comprehensive theory, anytime algorithms and an implementation based on branch-and-bound optimisation for computing provable guarantees of adversarial robustness of Gaussian process models, for both multi-class classification and regression. This involves computing lower and upper bounds on the model’s prediction range. The image illustrates how the method works: a region R is refined into R1 and R2 to improve the bounds.
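The refinement step described above can be illustrated with a generic branch-and-bound loop. The following is a minimal sketch, not the Gaussian-process-specific procedure: it assumes a one-dimensional function with a known Lipschitz constant (a stand-in for the bounds derived in the work), and the names `branch_and_bound_min`, `lipschitz`, `tol` and `max_iters` are hypothetical. It is anytime in the sense that the returned pair brackets the true minimum even if the loop is stopped early.

```python
import heapq
import math


def branch_and_bound_min(f, lo, hi, lipschitz, tol=1e-3, max_iters=10_000):
    """Return (lower, upper) with lower <= min of f over [lo, hi] <= upper.

    Assumption: |f(x) - f(y)| <= lipschitz * |x - y| on [lo, hi].
    """

    def region_bounds(a, b):
        # Evaluating f at the midpoint gives an upper bound on the minimum;
        # subtracting the largest possible decrease gives a lower bound.
        mid = 0.5 * (a + b)
        value = f(mid)
        return value - lipschitz * 0.5 * (b - a), value

    lower, best_upper = region_bounds(lo, hi)
    heap = [(lower, lo, hi)]  # regions ordered by their lower bound
    for _ in range(max_iters):
        lower, a, b = heap[0]
        if best_upper - lower <= tol:
            break
        heapq.heappop(heap)
        mid = 0.5 * (a + b)
        # Refine region R = [a, b] into R1 = [a, mid] and R2 = [mid, b].
        for a_i, b_i in ((a, mid), (mid, b)):
            child_lower, child_upper = region_bounds(a_i, b_i)
            best_upper = min(best_upper, child_upper)
            # The parent's lower bound remains valid on each child.
            heapq.heappush(heap, (max(child_lower, lower), a_i, b_i))
    return heap[0][0], best_upper


def f(x):
    # Toy function; |f'(x)| <= 3.5 everywhere.
    return math.sin(3.0 * x) + 0.5 * x


lower, upper = branch_and_bound_min(f, 0.0, 4.0, lipschitz=3.5)
print(f"min f lies in [{lower:.4f}, {upper:.4f}]")
```

Always splitting the region with the smallest lower bound tightens the global lower bound as quickly as possible, while every midpoint evaluation can only improve the upper bound.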

Since adversarial examples are arguably related to uncertainty, Bayesian neural networks (BNNs), i.e., neural networks with a probability distribution placed over their weights and biases, have the potential to provide stronger robustness properties. BNNs also enable principled evaluation of model uncertainty, which can be taken into account at prediction time to enable safe decision making. We study probabilistic safety for BNNs, defined as the probability that for all points in a given input set the prediction of the BNN is in a specified safe output set. In adversarial settings, this translates into computing the probability that adversarial perturbations of an input result in small variations in the BNN output, which represents a probabilistic variant of local robustness for deterministic neural networks.

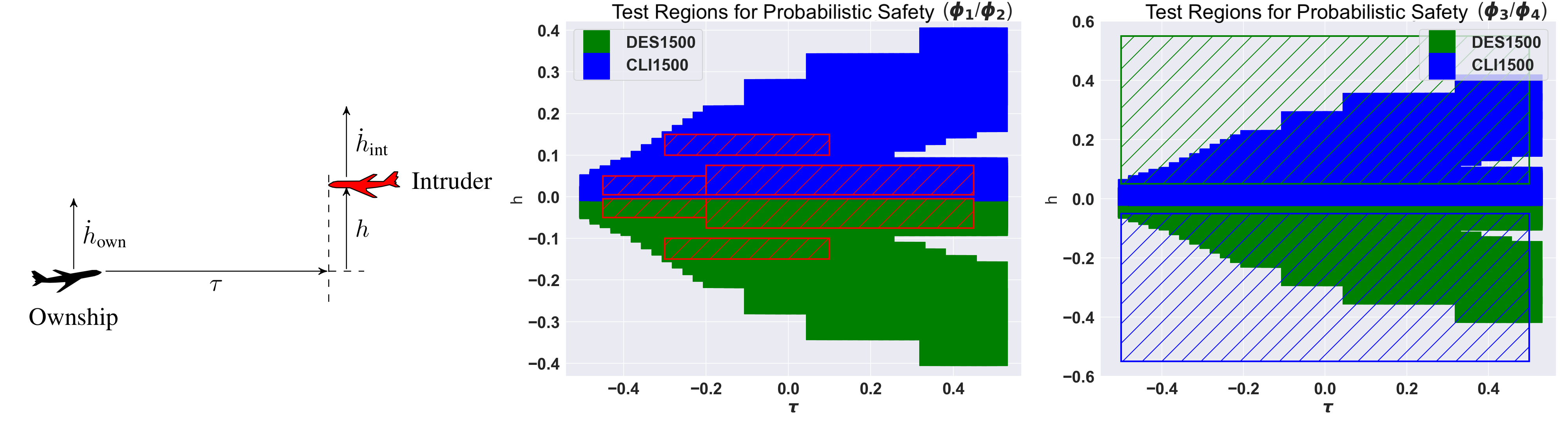

We propose a framework based on relaxation techniques from non-convex optimisation (interval and linear bound propagation) for the analysis of probabilistic safety for BNNs with general activation functions and multiple hidden layers. We evaluate the methods on the VCAS autonomous aircraft controller. The image shows the geometry of VCAS (left), visualisation of ground truth labels (centre) and the computed safe regions (right).
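To give a flavour of interval bound propagation, the sketch below pushes an input box through a small fully connected ReLU network whose weights and biases are themselves only known to lie in intervals, as happens when a set of BNN weights is considered at once. It is an illustrative sketch rather than the framework's implementation: the function names, the toy `layers` values and the choice of ReLU are assumptions made for the example.

```python
def interval_affine(x_lo, x_hi, w_lo, w_hi, b_lo, b_hi):
    """Bound W x + b when x, W and b each lie in elementwise intervals."""
    out_lo, out_hi = [], []
    for row_lo, row_hi, bias_lo, bias_hi in zip(w_lo, w_hi, b_lo, b_hi):
        lo, hi = bias_lo, bias_hi
        for xl, xh, wl, wh in zip(x_lo, x_hi, row_lo, row_hi):
            # The product of two intervals is attained at a corner.
            products = (wl * xl, wl * xh, wh * xl, wh * xh)
            lo += min(products)
            hi += max(products)
        out_lo.append(lo)
        out_hi.append(hi)
    return out_lo, out_hi


def interval_relu(lo, hi):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def propagate(x_lo, x_hi, layers):
    """Propagate an input box through affine layers with ReLU in between."""
    lo, hi = list(x_lo), list(x_hi)
    for index, (w_lo, w_hi, b_lo, b_hi) in enumerate(layers):
        lo, hi = interval_affine(lo, hi, w_lo, w_hi, b_lo, b_hi)
        if index < len(layers) - 1:  # no activation on the output layer
            lo, hi = interval_relu(lo, hi)
    return lo, hi


# Toy network (hypothetical values): 2 inputs, 2 hidden units, 1 output.
# Each layer is (weight lower, weight upper, bias lower, bias upper).
layers = [
    ([[0.9, -1.1], [0.4, 0.1]], [[1.1, -0.9], [0.6, 0.3]], [0.0, -0.1], [0.0, 0.1]),
    ([[1.0, -2.0]], [[1.0, -2.0]], [0.0], [0.0]),
]
out_lo, out_hi = propagate([0.4, 0.4], [0.6, 0.6], layers)
print(out_lo, out_hi)
```

If the resulting output interval lies inside the safe set, every network with weights in the given box is safe on the whole input box. Linear bound propagation follows the same pattern but tracks linear functions of the input instead of constants, which typically gives tighter bounds.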

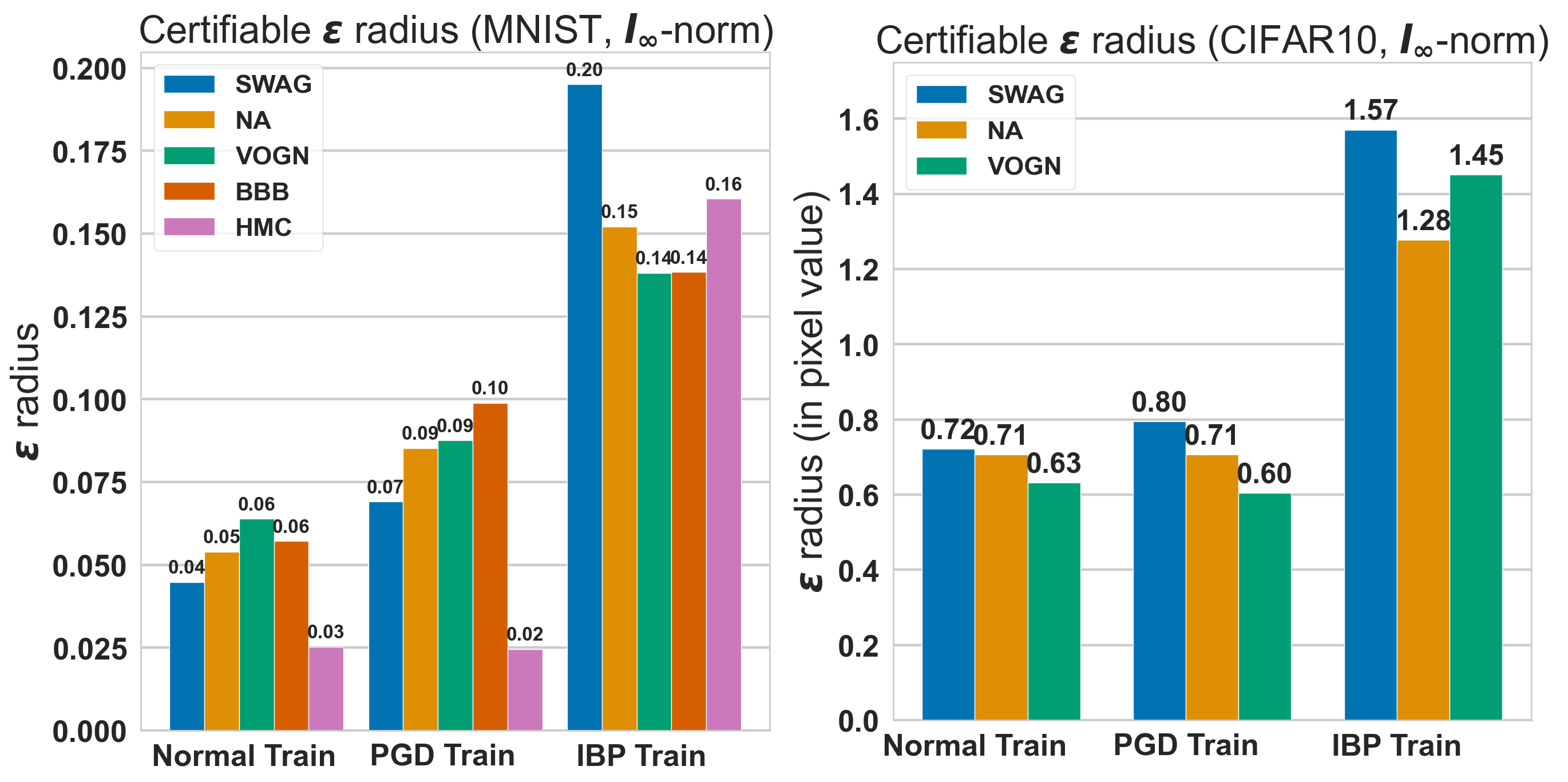

We develop the first principled framework for adversarial training of Bayesian neural networks (BNNs) with certifiable guarantees, enabling applications in safety-critical contexts. We rely on techniques from constraint relaxation of nonconvex optimisation problems and modify the standard cross-entropy error model to enforce posterior robustness to worst-case perturbations in ϵ-balls around input points.

The plot shows the average certified radius for images from MNIST (right) and CIFAR-10 (left), computed using CNN-Cert. We observe that robust training with IBP (Interval Bound Propagation) roughly doubles the maximum verifiable radius compared with both standard training and training on PGD adversarial examples.
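One common way to make a cross-entropy loss account for worst-case perturbations, given lower and upper bounds on the logits over an ϵ-ball (for instance from IBP), is sketched below. This is an illustration of the general idea under stated assumptions, not the exact error model used in the work: the mixing weight `lam` and all function names are hypothetical, and the logit bounds are assumed to be supplied by a separate bounding procedure.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one example, using a stable log-sum-exp."""
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[label]


def worst_case_logits(logit_lo, logit_hi, label):
    """Lowest logit for the true class, highest for every other class."""
    return [
        lo if k == label else hi
        for k, (lo, hi) in enumerate(zip(logit_lo, logit_hi))
    ]


def robust_cross_entropy(logits, logit_lo, logit_hi, label, lam=0.5):
    """Mix the nominal loss with the loss on the worst-case logits."""
    nominal = cross_entropy(logits, label)
    worst = cross_entropy(worst_case_logits(logit_lo, logit_hi, label), label)
    return lam * nominal + (1.0 - lam) * worst


# Toy example with three classes and true class 0 (values are made up).
loss = robust_cross_entropy(
    logits=[1.5, 0.0, 0.5],
    logit_lo=[1.0, -1.0, 0.0],
    logit_hi=[2.0, 0.5, 1.0],
    label=0,
)
print(loss)
```

Because cross-entropy decreases in the true-class logit and increases in all others, the worst-case term upper-bounds the loss at every point of the ϵ-ball, so minimising it pushes the whole ball towards the correct class.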

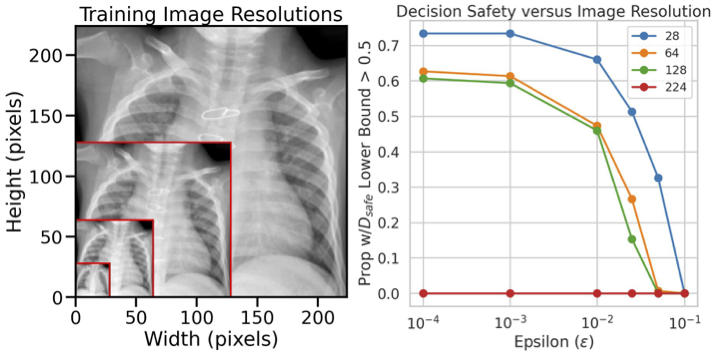

We study the problem of certifying the robustness of Bayesian neural networks (BNNs) to adversarial input perturbations. We define two notions of robustness for BNNs in an adversarial setting: probabilistic robustness and decision robustness. Probabilistic robustness is the probability that for all points in a given input set T the output of a BNN sampled from the posterior is in a safe set S. On the other hand, decision robustness considers the optimal decision of a BNN and checks if for all points in T the optimal decision of the BNN for a given loss function lies within the output set S. Although exact computation of these robustness properties is challenging due to the probabilistic and non-convex nature of BNNs, we present a unified computational framework for efficiently and formally bounding them, and evaluate the effectiveness of our methods on various regression and classification tasks.

The image shows different training image resolutions on a training image sample from the PneumoniaMNIST dataset (left), for which we plot (right) computed lower bounds on decision robustness as we vary the resolution.
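The two notions defined above can be written compactly as follows. The notation is an assumption introduced here for illustration: $f^{w}$ denotes the network with weights $w$, $p(w \mid \mathcal{D})$ the posterior given data $\mathcal{D}$, and $\mathcal{L}$ the loss function used to select the optimal decision.

```latex
% Probabilistic robustness: posterior probability that a sampled network
% maps every point of the input set T into the safe set S.
P_{\mathrm{safe}}(T, S) \;=\;
  \mathrm{Prob}_{w \sim p(w \mid \mathcal{D})}
  \bigl( \forall x \in T :\; f^{w}(x) \in S \bigr)

% Decision robustness: the optimal decision under the loss \mathcal{L}
% must lie in S for every point of T.
\hat{y}(x) \;=\; \arg\min_{y}\;
  \mathbb{E}_{w \sim p(w \mid \mathcal{D})}
  \bigl[ \mathcal{L}\bigl(f^{w}(x),\, y\bigr) \bigr],
\qquad
\forall x \in T :\; \hat{y}(x) \in S
```

The first property is a probability over networks, whereas the second is a yes-or-no statement about the single decision obtained after averaging over the posterior.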

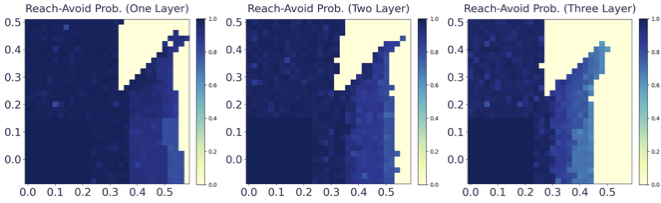

Model-based reinforcement learning seeks to simultaneously learn the dynamics of an unknown stochastic environment and synthesise an optimal policy for acting in it. Ensuring the safety and robustness of sequential decisions made through a policy in such an environment is a key challenge for policies intended for safety-critical scenarios. In this work, we investigate two complementary problems: first, computing reach-avoid probabilities for iterative predictions made with dynamical models, with dynamics described by a Bayesian neural network (BNN); second, synthesising control policies that are optimal with respect to a given reach-avoid specification (reaching a "target" state, while avoiding a set of "unsafe" states) and a learned BNN model. The computed lower bounds provide safety certification for the given policy and BNN model. We then introduce control synthesis algorithms to derive policies maximising said lower bounds on the safety probability. We demonstrate the effectiveness of our method on a series of control benchmarks characterised by learned BNN dynamics models.

The images show the lower-bound reach-avoid probabilities for a BNN dynamics model as we vary the depth from one- to two- and three-layer BNNs.
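The way lower bounds on reach-avoid probabilities accumulate over time can be sketched with a backward recursion on a finite set of states. This is a simplified illustration, not the method of the work: it assumes the state space has already been partitioned into finitely many regions and that a lower bound `p_lo[q][r]` on the probability of moving from region `q` to region `r` under the fixed policy is available (in the setting above, such bounds would have to be derived from the BNN dynamics model). All names are hypothetical.

```python
def reach_avoid_lower_bound(p_lo, target, unsafe, horizon):
    """Lower-bound the probability of reaching `target` within `horizon`
    steps while never entering `unsafe`, for each starting region.

    p_lo[q][r] is an assumed lower bound on the one-step probability of
    moving from region q to region r.
    """
    n = len(p_lo)
    # With zero steps left, only regions already in the target succeed.
    value = [1.0 if q in target else 0.0 for q in range(n)]
    for _ in range(horizon):
        value = [
            1.0 if q in target
            else 0.0 if q in unsafe
            else sum(p_lo[q][r] * value[r] for r in range(n))
            for q in range(n)
        ]
    return value


# Toy abstraction (made-up numbers): region 0 is the start,
# region 1 the target, region 2 unsafe. Rows need not sum to one
# because they are lower bounds, not exact probabilities.
p_lo = [
    [0.5, 0.3, 0.1],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
for steps in (1, 2, 3):
    print(steps, reach_avoid_lower_bound(p_lo, {1}, {2}, steps)[0])
```

Since all values are non-negative, replacing exact transition probabilities by lower bounds can only decrease the result, so the returned numbers remain valid lower bounds on the true reach-avoid probability.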

To know more about these models and analysis techniques, follow the links below.