The deployment of autonomous robots promises to improve human lifespan, productivity and well-being. However, integrating these robots successfully into our society is challenging, as demonstrated by a fatal crash involving a Tesla, thought to have been caused by the driver placing excessive trust in the vehicle. Ensuring smooth, safe and beneficial collaboration between humans and robots will require machines to understand our motivations and complex mental attitudes such as trust, guilt or shame, and to be able to mimic them. Robots can take an active role in reducing misuse if they are able to detect human biases, inaccurate beliefs or overtrust, and to make accurate predictions of human behaviour.

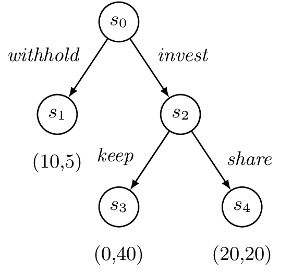

This work investigates a cognitive extension of stochastic multiplayer games, a novel parametric framework for multi-agent, human-like decision making, which aims to capture human motivation through mental as well as physical goals. Additionally, limitations of human reasoning are captured via a parameterisation of each agent, which also allows us to represent the wide variety of people’s personalities. The mental shortcuts that humans use to make sense of a complex external environment are captured by equipping agents with heuristics. Our framework allows cognitive notions such as trust to be expressed in terms of beliefs, whose dynamics are affected by the agent’s observations of interactions and by its own preferences.
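To make the idea of "trust as a belief" concrete, the sketch below represents an agent's trust in a partner as a Beta(alpha, beta) belief about the probability that the partner reciprocates. This is an illustrative stand-in, not the framework's actual model: the class `TrustBelief`, the initial pseudo-counts used to encode an agent's disposition, and the `memory` parameter used to mimic limited reasoning are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class TrustBelief:
    """Hypothetical model: trust as a Beta(alpha, beta) belief that a partner reciprocates."""
    alpha: float = 1.0   # pseudo-count of reciprocation (initial value encodes the agent's disposition)
    beta: float = 1.0    # pseudo-count of defection
    memory: float = 1.0  # hypothetical cognitive parameter: 1.0 keeps all evidence, <1.0 forgets old evidence

    def observe(self, reciprocated: bool) -> None:
        # Discount previously accumulated evidence, then add the new observation.
        self.alpha *= self.memory
        self.beta *= self.memory
        if reciprocated:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def trust(self) -> float:
        # Expected probability that the partner reciprocates under the current belief.
        return self.alpha / (self.alpha + self.beta)


# Usage: an agent with a neutral prior observes two reciprocations and one defection.
belief = TrustBelief()
for outcome in (True, True, False):
    belief.observe(outcome)
print(belief.trust)  # 0.6
```

Different personalities can be sketched by changing the prior (for example, `TrustBelief(alpha=4, beta=1)` starts optimistic) or the `memory` parameter, so that two agents observing the same interactions end up with different levels of trust.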

Along with the theoretical model, we provide a tool, implemented using probabilistic programming, that allows one to specify cognitive models, simulate their execution, generate quantitative behavioural predictions and learn agent characteristics from data.
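As a rough illustration of "learning agent characteristics from data", the sketch below infers a single hypothetical parameter, the probability `p` that a participant chooses to invest, from a list of observed 0/1 decisions. It uses simple grid-based Bayesian inference in plain Python rather than the tool's probabilistic programming language, and the function names, grid size and priors are assumptions for illustration only. The optional `prior` argument shows how an informed prior (for instance, one derived from a questionnaire) shifts the estimate.

```python
def grid_posterior(decisions, prior=lambda p: 1.0, n=101):
    """Posterior over a hypothetical parameter p = P(invest), evaluated on n grid points.

    decisions: list of 0/1 observed choices (1 = invest).
    prior: unnormalised prior density over p (uniform by default).
    """
    grid = [i / (n - 1) for i in range(n)]
    k = sum(decisions)      # number of "invest" decisions
    m = len(decisions)      # total number of decisions
    # Bernoulli likelihood p^k * (1 - p)^(m - k), weighted by the prior.
    weights = [prior(p) * p ** k * (1 - p) ** (m - k) for p in grid]
    total = sum(weights)
    return grid, [w / total for w in weights]


def posterior_mean(grid, posterior):
    # Expected value of p under the normalised grid posterior.
    return sum(p * w for p, w in zip(grid, posterior))


decisions = [1, 1, 1, 0]

# Uninformed (uniform) prior: the mean is approximately 2/3.
grid, post = grid_posterior(decisions)
print(posterior_mean(grid, post))

# Hypothetical informed prior favouring high p: the mean moves up to approximately 0.8.
grid, post = grid_posterior(decisions, prior=lambda p: p ** 4)
print(posterior_mean(grid, post))
```

A grid is used here only because it keeps the example self-contained; a probabilistic programming system would typically express the same model declaratively and handle inference over many parameters at once.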

We have designed and conducted an experiment in which human participants play the classical Trust Game [https://www.frontiersin.org/articles/10.3389/fnins.2019.00887/full] against a custom bot whose decision-making is driven by our cognitive stochastic game framework. The roles of investor and investee are assigned at random, and the bot is able to play either role. Results show that predictions of human behaviour generated by the framework are on par with, and in some circumstances superior to, the state of the art. Moreover, our study demonstrates that using an informed prior about the participants’ preferences and cognitive abilities, collected through a simple questionnaire, can significantly improve the accuracy of behavioural predictions. Further, we find evidence of participants overtrusting the bot, confirming what has been hypothesised for Tesla drivers. On the other hand, the vast majority of participants displayed a high degree of opportunism when interacting with the bot, suggesting that machines are not treated on an equal footing with humans.
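For readers unfamiliar with the Trust Game, the sketch below computes one round's payoffs. It assumes the common textbook setup: the investor sends part of an endowment, the amount sent is multiplied (by 3 here), and the investee decides how much of the multiplied amount to return. The multiplier, the investee starting with nothing, and the function name are assumptions; the parameters used in the experiment described above may differ.

```python
def trust_game_payoffs(endowment, sent, returned, multiplier=3):
    """Return (investor_payoff, investee_payoff) for one round of a Trust Game.

    Assumes the investee starts with nothing and that the amount sent is
    multiplied by `multiplier` (3 in the common textbook version).
    """
    if not 0 <= sent <= endowment:
        raise ValueError("the investor can send between 0 and the endowment")
    pot = multiplier * sent
    if not 0 <= returned <= pot:
        raise ValueError("the investee can return between 0 and the multiplied amount")
    investor = endowment - sent + returned
    investee = pot - returned
    return investor, investee


# Full trust, fair split: both players end with 15.
print(trust_game_payoffs(10, 10, 15))  # (15, 15)
# Partial trust met with opportunism: the investee keeps everything.
print(trust_game_payoffs(10, 4, 0))    # (6, 12)
```

The second call illustrates the kind of opportunistic investee behaviour mentioned above: returning nothing maximises the investee's payoff in a single round, at the expense of the investor who extended trust.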

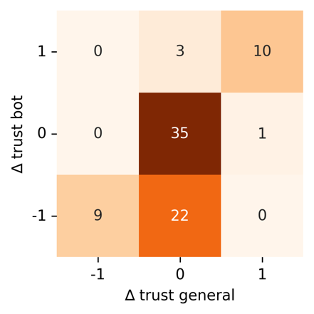

The image depicts a confusion matrix showing how the change in trust towards the bot (y axis) translates into a change in trust towards robots in general (x axis). Values −1, 0 and 1 represent participants reporting a decrease, no change and an increase in trust, respectively. The data, collected on Amazon Mechanical Turk, is available here.
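A matrix like the one in the figure can be tabulated from per-participant responses as sketched below. Each response is assumed to be a pair `(change_towards_bot, change_towards_robots)` with values in {−1, 0, 1}; rows follow the y axis (the bot) and columns the x axis (robots in general). The input pairs in the usage example are toy values for illustration, not data from the study.

```python
from collections import Counter

LEVELS = (-1, 0, 1)  # decrease, no change, increase


def trust_change_matrix(pairs):
    """3x3 count matrix: rows = change of trust towards the bot,
    columns = change of trust towards robots in general."""
    for bot, general in pairs:
        if bot not in LEVELS or general not in LEVELS:
            raise ValueError(f"unexpected response {(bot, general)}")
    counts = Counter(pairs)
    return [[counts[(bot, general)] for general in LEVELS] for bot in LEVELS]


# Toy responses (not the study's data).
toy = [(1, 1), (1, 0), (0, 0), (-1, -1), (-1, -1)]
for level, row in zip(LEVELS, trust_change_matrix(toy)):
    print(level, row)
```

Counts on the diagonal correspond to participants whose trust in robots generally moved in the same direction as their trust in the bot.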

Controller synthesis techniques have been developed that account for uncertain human preferences in a multi-objective context. The image shows an example map for robotic planning in urban search and rescue missions. The robot aims to navigate to the victim (star) location along the shortest route while minimising the risk posed by the (red) fire zones.
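The trade-off between route length and fire risk can be illustrated with a much simpler technique than full controller synthesis: weighted-sum scalarisation of the two objectives, solved with Dijkstra's algorithm on a small deterministic grid. In the sketch below the map, the cell symbols (`S` start, `V` victim, `F` fire) and the `risk_weight` parameter, which stands in for a human's preference between speed and safety, are all hypothetical; the techniques referred to above handle uncertainty and preferences in a more general way.

```python
import heapq

# Hypothetical map: S = robot start, V = victim, F = fire cell, . = free cell.
GRID = [
    "S.F.V",
    "..F..",
    ".....",
]


def plan(grid, risk_weight):
    """Minimise steps + risk_weight * (number of fire cells entered).

    Returns (steps, fire_cells_entered) for an optimal path, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "S")
    goal = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "V")
    # Heap entries: (scalarised cost, steps, risk, cell).
    frontier = [(0.0, 0, 0, start)]
    best = {start: 0.0}
    while frontier:
        cost, steps, risk, (r, c) = heapq.heappop(frontier)
        if (r, c) == goal:
            return steps, risk
        if cost > best.get((r, c), float("inf")):
            continue  # stale heap entry superseded by a cheaper one
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                step_risk = 1 if grid[nr][nc] == "F" else 0
                new_cost = cost + 1 + risk_weight * step_risk
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    heapq.heappush(frontier, (new_cost, steps + 1, risk + step_risk, (nr, nc)))
    return None


# A user who cares little about risk gets the short route through the fire zone.
print(plan(GRID, risk_weight=0.5))  # (4, 1)
# A risk-averse user gets a longer detour that avoids fire entirely.
print(plan(GRID, risk_weight=10))   # (8, 0)
```

Sweeping `risk_weight` traces out different trade-offs between the two objectives; a weighted sum can only recover trade-offs on the convex hull of the Pareto front, which is one reason dedicated multi-objective methods are used in practice.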

To learn more about these models and analysis techniques, follow the links below.

Software: CognitiveAgents